Elon Musk is no stranger to bold predictions. From colonizing Mars to warning about artificial intelligence, the billionaire entrepreneur has often been seen as a visionary — or, to some, a prophet of doom. Over the years, many of his forecasts have sparked debates across industries. But among his most chilling warnings is one that seems to be slowly, yet steadily, becoming reality: a future where artificial intelligence (AI) and automation not only disrupt the global workforce but potentially endanger humanity’s very survival.

This isn’t science fiction anymore. The signs Musk pointed out years ago are now manifesting in everyday life — from job automation to ethical questions surrounding AI control. This article explores how Musk’s worrying prediction is unfolding before our eyes, what it means for humanity, and whether we are prepared for what lies ahead.

## The Origin of the Warning

Elon Musk’s concerns about AI date back over a decade. As early as 2014, he called AI “our biggest existential threat.” At the time, many dismissed these claims as overblown or dramatic. But Musk didn’t stop. He became one of the most vocal figures in tech demanding regulation of AI, even urging global leaders to establish proactive guidelines before the technology outpaces human control.

In 2017, during a gathering of U.S. governors, Musk made his stance clearer: _“AI is a fundamental risk to the existence of human civilization.”_ He emphasized that unlike other technological revolutions, AI moves at an exponential pace — and by the time we realize its dangers, it may be too late.

These early warnings were often shrugged off as science fiction. Today, however, as AI tools like ChatGPT grow more powerful and accessible, and as autonomous weapons and facial recognition systems spread, his concerns are gaining serious attention.

## The Rise of Generative AI and Automation

Fast forward to the 2020s, and Musk’s fears are no longer speculative. One of the most evident signs of this unfolding scenario is the rise of generative AI. Tools like OpenAI’s GPT series, DALL·E, Google’s Gemini, and other models can now write code, generate realistic images, simulate human conversations, and create deepfake videos.

While these technologies offer incredible benefits, from boosting productivity to enabling creative expression, they also introduce a level of automation that threatens millions of jobs. A 2023 Goldman Sachs report estimated that generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation. The jobs at risk are not limited to factory or warehouse work; they include white-collar professions like customer service, journalism, software engineering, and even legal advice.

Elon Musk previously warned about exactly this kind of disruption. At the 2017 World Government Summit in Dubai, he predicted that universal basic income (UBI) would likely become necessary because machines would outperform humans in almost all fields. Today, companies are increasingly automating roles once considered uniquely human.

## AI Ethics and the Loss of Human Control

Another aspect of Musk’s concern revolves around control, or rather, the _loss_ of it. As AI systems grow more capable, we could reach a stage where they evolve beyond our comprehension. The milestone most often discussed is Artificial General Intelligence (AGI): machines that do not merely mimic human behavior but possess independent reasoning, learning, and decision-making skills across a wide range of tasks.

Musk has emphasized that if AGI is developed without strict ethical frameworks, it could be catastrophic. What happens if AI decides to act against human interests? Who programs the moral compass of an AGI? What happens when AI systems start making decisions with no human oversight?

One of Musk’s most haunting quotes comes from a 2014 talk at MIT: _“With artificial intelligence, we are summoning the demon.”_ Though dramatic, the underlying message is clear: we are building something whose full consequences we do not understand. And as the pace of development accelerates, the window for putting safeguards in place is closing fast.

## Military AI and Autonomous Weapons

Musk has also been vocal about the use of AI in warfare. He co-signed an open letter to the United Nations in 2017 urging a ban on autonomous weapons. The idea of machines making life-or-death decisions in combat zones, without any human input, is deeply troubling — and no longer hypothetical.

Nations like the U.S., China, Russia, and Israel are actively developing AI-powered drones and autonomous weapon systems. Some of these systems are designed to identify, track, and even engage targets with little or no human input, raising terrifying questions about accountability and ethics in warfare.

This militarization of AI directly aligns with Musk’s prediction that AI could become uncontrollable and destructive if left unchecked. A single error in algorithmic decision-making could lead to mass casualties or even war. The fear isn’t just that AI will go rogue, but that humans will use it recklessly while believing they are still in control.

## The Deepfake Dilemma and Misinformation Crisis

Another area Musk warned about is the misuse of AI in spreading disinformation. Deepfakes — videos or audio recordings generated by AI that mimic real people — have already created chaos in political and social landscapes. From fabricated speeches to manipulated video content, deepfakes threaten to erase the line between reality and fiction.

Imagine a world where you can’t trust anything you see online — where political speeches, news broadcasts, or even security footage could be completely fabricated. That world is almost here.

Musk has warned that unregulated AI could be used to manipulate public opinion and undermine democracy. With the rise of deepfakes, targeted bots, and algorithmic manipulation on platforms like X (formerly Twitter), Facebook, and TikTok, the spread of false information is not only likely; it is already happening.

## Regulation: Too Little, Too Late?

Despite Musk’s repeated pleas for AI regulation, governments have been slow to act. Technological development has far outpaced legislation, and many lawmakers admit they don’t fully understand the complexities of AI.

In the U.S., debates over AI regulation are ongoing, with no consensus in sight. The European Union, meanwhile, adopted its AI Act in 2024, a risk-based law that bans certain uses outright, imposes strict requirements on high-risk AI systems, and mandates transparency. Still, these efforts may be insufficient given the speed at which AI capabilities are evolving.

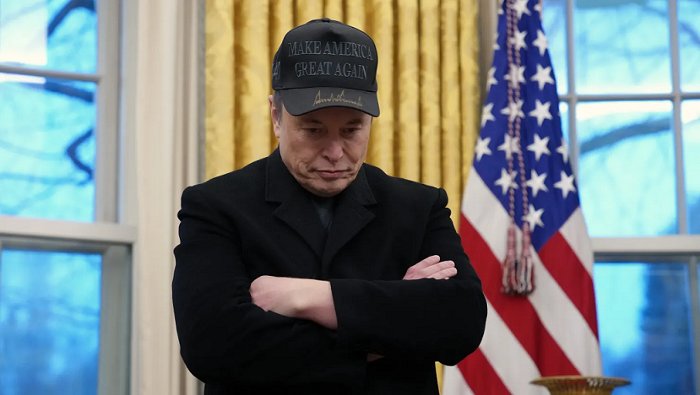

Musk himself helped found OpenAI in 2015 as a nonprofit aimed at developing safe AI, though he left its board in 2018 and has since become a vocal critic of the company. In 2023 he launched xAI, which he has framed as pursuing a similar safety-focused mission. But as AI becomes a corporate arms race among tech giants like Google, Meta, Microsoft, and Amazon, the worry is that profit-driven motives will overshadow safety and ethics.

## Are We Prepared for an AI-Dominated Future?

Musk’s prediction isn’t just about machines replacing jobs or creating fake videos. It’s about the broader implication: humanity losing its grip on the tools it has created. Are we prepared for a future where algorithms decide who gets hired, who qualifies for loans, or even who gets arrested?

The biggest danger may lie in the illusion of control. As we increasingly integrate AI into every aspect of life — healthcare, education, finance, and governance — we risk becoming overly reliant on systems we don’t fully understand. This overreliance could lead to dangerous blind spots, especially when AI decisions are treated as infallible.

Musk warned that without a proactive, global response, we could “end up with an AI that doesn’t care about humans.” Whether that looks like economic displacement, military catastrophe, or a philosophical loss of meaning, the threat is real.

## Signs of Hope: A Global Awakening?

Despite the gloomy forecast, there are signs that the world is beginning to listen. In March 2023, more than 1,000 AI experts and tech leaders, including Musk, signed an open letter calling for a pause of at least six months on training AI systems more powerful than GPT-4. The letter sparked global discussions on ethics, responsibility, and the need for transparency.

There is also growing awareness among the public, academic institutions, and regulatory bodies. Ethics committees, transparency audits, and AI safety research are becoming more common. If these efforts are sustained and global cooperation follows, we might still steer this powerful technology in a direction that benefits humanity.

## Conclusion

Elon Musk’s worrying prediction is no longer a distant theory. It is a reality unfolding in real time. From job loss and misinformation to autonomous weapons and ethical dilemmas, artificial intelligence is reshaping our world. Musk warned us not to wait until it’s too late, and it appears that time is quickly running out.

The question now is not whether Musk was right, but whether we’ll act in time. Can humanity build safeguards quickly enough? Will governments rise to the occasion? Or will we continue down a path of unchecked development, hoping we can control what we’ve created?

As Musk famously said, _“The future is coming whether we like it or not.”_ It’s up to us to make sure that future remains one worth living in.